Let us now turn to a repeated moral hazard relationship. The main goal of this section is to show how the past history of performances may help the principal to relax incentive compatibility constraints, even when there is no correlation between shocks in different periods.

1. The Model

We will come back to the moral hazard framework of chapter 4. For now, we assume that the relationship between the principal and the agent is repeated for two periods. The risk-averse agent has an intertemporal utility given by

$$u(t_1) - \psi(e_1) + \delta\big(u(t_2) - \psi(e_2)\big),$$

where $t_i$ (resp. $e_i$) is the agent's transfer (resp. effort) at date $i$ and $\delta$ is the discount factor. Again, we assume that $e_i$ belongs to $\{0, 1\}$, with disutilities normalized as usual so that $\psi(1) = \psi$ and $\psi(0) = 0$. In each period, the agent's effort yields a stochastic return $\tilde q_i$ in $\{\underline q, \bar q\}$, with $\Pr(\tilde q_i = \bar q \mid e_i) = \pi(e_i)$. We denote $\pi_1 = \pi(1)$, $\pi_0 = \pi(0)$, and $\Delta\pi = \pi_1 - \pi_0 > 0$. Stochastic returns are independently distributed over time, so that the past history of realizations does not yield any information on the current likelihood of a success or a failure of the production process. As usual, the principal is risk-neutral and has a separable utility function $S(\tilde q_1) - t_1 + \delta\big(S(\tilde q_2) - t_2\big)$, where $S(\cdot)$ is his benefit from production; we write $\bar S = S(\bar q)$ and $\underline S = S(\underline q)$.

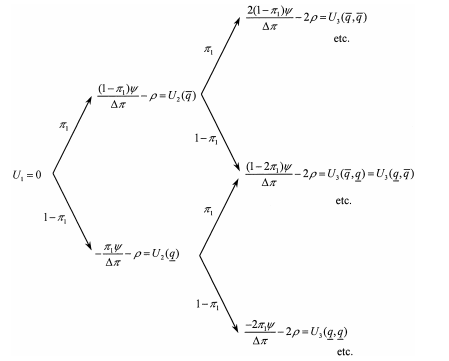

Figure 8.3: Timing with a Twice-Repeated Moral Hazard Problem

In this two-period environment, the principal offers a long-term contract to the agent. In full generality, this contract involves transfers at each date that are contingent on the whole past history of outcomes. Typically, a long-term contract is written as $C = \{t_1(\tilde q_1), t_2(\tilde q_1, \tilde q_2)\}$, where $\tilde q_1$ and $\tilde q_2$ are output realizations in periods 1 and 2, respectively. Such a contract thus stipulates 2 + 2 × 2 = 6 possible transfers, depending on the realizations of outcomes. For simplicity of notation, we use $(\bar t_1, \underline t_1)$ to denote the first-period transfers. Similarly, $(\bar t_2(\bar q), \underline t_2(\bar q))$ and $(\bar t_2(\underline q), \underline t_2(\underline q))$ denote transfers in the second period, following a high and a low first-period output, respectively. As usual, the description of participation and incentive constraints is easier when one introduces the new variables $\bar u_1 = u(\bar t_1)$, $\underline u_1 = u(\underline t_1)$, $\bar u_2(q_1) = u(\bar t_2(q_1))$, and $\underline u_2(q_1) = u(\underline t_2(q_1))$, for $q_1$ in $\{\underline q, \bar q\}$.

For further references, the timing of contracting is shown in figure 8.3.

Remark: The reader will have recognized the framework of a multitask moral hazard problem along the lines of the one presented in chapter 5. There are two main differences. First, because actions are now taken sequentially at two different dates, payments also take place at two different dates. Second, the separability of the agent's disutility of effort over time will allow a somewhat simpler characterization of the optimal contract, as we will see below.

2. The Optimal Long-Term Contract

We focus on the case where effort is extremely valuable for the principal, who always wants to implement a high level of effort in both periods. We can thus describe the second-period incentive constraints as

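$$\pi_1\bar u_2(q_1) + (1-\pi_1)\underline u_2(q_1) - \psi \;\ge\; \pi_0\bar u_2(q_1) + (1-\pi_0)\underline u_2(q_1), \quad\text{i.e.,}\quad \Delta\pi\big(\bar u_2(q_1) - \underline u_2(q_1)\big) \ge \psi, \quad \text{for all } q_1 \in \{\underline q, \bar q\}. \qquad (8.31)$$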

In full generality, these constraints obviously depend on the first-period level of output q1, i.e., on the history of past performances.

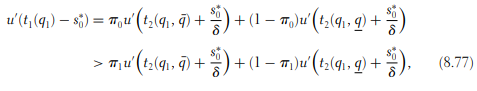

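Let us move backward. In period 1, the agent anticipates his future stream of random payoffs to evaluate whether exerting a first-period effort is worthwhile. The first-period incentive constraint is written as

$$\pi_1\bar u_1 + (1-\pi_1)\underline u_1 - \psi + \delta\big(\pi_1 u_2(\bar q) + (1-\pi_1)u_2(\underline q)\big) \;\ge\; \pi_0\bar u_1 + (1-\pi_0)\underline u_1 + \delta\big(\pi_0 u_2(\bar q) + (1-\pi_0)u_2(\underline q)\big). \qquad (8.32)$$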

The terms $\bar u_1$ and $\underline u_1$ represent the current utility gains associated with the transfers received by the agent in period 1, depending on the realized production. The terms $\delta u_2(q_1)$, where $u_2(q_1) = \pi_1\bar u_2(q_1) + (1-\pi_1)\underline u_2(q_1) - \psi$, represent the discounted expected utility gains associated with the transfers received by the agent in period 2 following each possible first-period output. Clearly, these continuation payoffs affect the first-period incentives to exert effort.

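Finally, the agent accepts the long-term contract before $\tilde q_1$ and $\tilde q_2$ realize. His intertemporal participation constraint is written as

$$\pi_1\bar u_1 + (1-\pi_1)\underline u_1 - \psi + \delta\big(\pi_1 u_2(\bar q) + (1-\pi_1)u_2(\underline q)\big) \ge 0. \qquad (8.33)$$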

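Denoting again by $h = u^{-1}$ the inverse of the agent's utility function, the problem that the principal must solve is

$$(P):\ \max_{\{\bar u_1, \underline u_1, \bar u_2(\cdot), \underline u_2(\cdot)\}} \ \pi_1\big(\bar S - h(\bar u_1)\big) + (1-\pi_1)\big(\underline S - h(\underline u_1)\big) + \delta \sum_{q_1 \in \{\underline q, \bar q\}} \Pr(q_1)\Big[\pi_1\big(\bar S - h(\bar u_2(q_1))\big) + (1-\pi_1)\big(\underline S - h(\underline u_2(q_1))\big)\Big]$$

subject to (8.31) to (8.33), where $\Pr(\bar q) = \pi_1$ and $\Pr(\underline q) = 1 - \pi_1$.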

Solving this problem highlights the particular role played by the agent's continuation payoffs for the second period following the first-period realization $q_1$, namely $u_2(q_1) = \pi_1\bar u_2(q_1) + (1-\pi_1)\underline u_2(q_1) - \psi$. If the agent has been promised an expected second-period utility $u_2(q_1)$, the levels of utility $(\bar u_2(q_1), \underline u_2(q_1))$ must satisfy the second-period participation constraints

$$\pi_1\bar u_2(q_1) + (1-\pi_1)\underline u_2(q_1) - \psi \ge u_2(q_1) \quad \text{for all } q_1 \in \{\underline q, \bar q\}. \qquad (8.34)$$

It is important to look at (8.32) and (8.33) to observe that the first-period incentives to exert effort, and the decision whether to accept the long-term contract, depend on what occurs in the second period only through the values of the continuation payoffs $u_2(q_1)$. They do not depend on how the principal chooses $(\bar u_2(q_1), \underline u_2(q_1))$ to deliver these payoffs. This flexibility only matters to provide the second-period incentives to exert effort.

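Given the promise made by the principal (which is credible because of our assumption of full commitment) of a future utility $u_2(q_1)$ for the agent following a first-period output $q_1$, the continuation contract for the second period solves the problem below:

$$(P_2(q_1)):\ \max_{\{\bar u_2(q_1), \underline u_2(q_1)\}} \ \pi_1\big(\bar S - h(\bar u_2(q_1))\big) + (1-\pi_1)\big(\underline S - h(\underline u_2(q_1))\big) \quad \text{subject to (8.31) and (8.34).}$$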

We denote the value of problem $(P_2(q_1))$ by $V_2(u_2(q_1))$. This is the principal's second-period payoff when he has promised a level of utility $u_2(q_1)$ to the agent. This problem is almost the same as the static problem of chapter 4 (section 4.4), and its solution can be derived similarly. The only difference is that, following a first-period output $q_1$, the agent must now receive a second-period utility $u_2(q_1)$ instead of zero, as in the static model of chapter 4. Applying the same techniques as in chapter 4, it is straightforward to show that both constraints (8.31) and (8.34) are binding at the optimum. Hence, we can compute the second-period agent's payoffs in both states of nature as

$$\bar u_2^D(q_1) = u_2(q_1) + \psi + \frac{(1-\pi_1)\psi}{\Delta\pi}, \qquad \underline u_2^D(q_1) = u_2(q_1) + \psi - \frac{\pi_1\psi}{\Delta\pi},$$

where the superscript D means dynamics.
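A quick numerical check of the binding second-period contract may help. This is a minimal sketch: the function name `second_period_payoffs`, the parameters ($\psi = 1$, $\pi_1 = 0.75$, $\pi_0 = 0.25$), and the promise $u_2(q_1) = 0.2$ are hypothetical choices for illustration.

```python
def second_period_payoffs(u2_promise, psi, pi1, pi0):
    """Return (u_high, u_low), the second-period utilities after a high and a
    low output when (8.31) and (8.34) both bind for the promise u2_promise."""
    dpi = pi1 - pi0
    u_high = u2_promise + psi + (1 - pi1) * psi / dpi
    u_low = u2_promise + psi - pi1 * psi / dpi
    return u_high, u_low


psi, pi1, pi0 = 1.0, 0.75, 0.25   # hypothetical parameters
u2_promise = 0.2                  # hypothetical promised continuation utility
u_high, u_low = second_period_payoffs(u2_promise, psi, pi1, pi0)

# (8.31) binds: the utility spread exactly offsets the disutility of effort.
print(round((pi1 - pi0) * (u_high - u_low), 10))          # 1.0
# (8.34) binds: expected utility net of effort equals the promise.
print(round(pi1 * u_high + (1 - pi1) * u_low - psi, 10))  # 0.2
```

Changing `u2_promise` shifts both utilities by the same amount, leaving the spread $\psi/\Delta\pi$ untouched: the promise affects the level of second-period rewards, not their incentive power.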

This yields the following expression of the second-best cost $C_2^{SB}(u_2(q_1))$ of implementing a high effort in period 2 following the promise of a second-period utility $u_2(q_1)$:

$$C_2^{SB}(u_2(q_1)) = \pi_1 h\Big(u_2(q_1) + \psi + \frac{(1-\pi_1)\psi}{\Delta\pi}\Big) + (1-\pi_1) h\Big(u_2(q_1) + \psi - \frac{\pi_1\psi}{\Delta\pi}\Big).$$

Finally, the continuation value of the contract for the principal can be defined as

$$V_2(u_2(q_1)) = \pi_1\bar S + (1-\pi_1)\underline S - C_2^{SB}(u_2(q_1)).$$

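For further references, note also that $C_2^{SB\prime}(u_2(q_1)) = \pi_1 h'(\bar u_2^D(q_1)) + (1-\pi_1)h'(\underline u_2^D(q_1)) = -V_2'(u_2(q_1))$.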
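The derivative of the second-period cost is an expected marginal cost, which is what later turns the first-order conditions into a martingale property. The sketch below compares a central finite difference of that cost with $\pi_1 h'(\bar u_2^D) + (1-\pi_1)h'(\underline u_2^D)$. The quadratic $h(u) = u + \frac{R}{2}u^2$ with a hypothetical $R = 0.5$, the helper names, and the parameter values are assumptions for illustration; any smooth, increasing, convex $h$ would do.

```python
R = 0.5  # hypothetical coefficient in h(u) = u + R * u**2 / 2


def h(u):
    return u + R * u * u / 2


def h_prime(u):
    return 1 + R * u


def branch_utilities(u2, psi, pi1, pi0):
    """Second-period utilities when (8.31) and (8.34) bind, given promise u2."""
    dpi = pi1 - pi0
    return u2 + psi + (1 - pi1) * psi / dpi, u2 + psi - pi1 * psi / dpi


def cost2(u2, psi, pi1, pi0):
    """Second-best cost of implementing high effort in period 2."""
    u_high, u_low = branch_utilities(u2, psi, pi1, pi0)
    return pi1 * h(u_high) + (1 - pi1) * h(u_low)


def cost2_prime(u2, psi, pi1, pi0):
    """Analytical derivative: the expected marginal cost E[h'(u_2)]."""
    u_high, u_low = branch_utilities(u2, psi, pi1, pi0)
    return pi1 * h_prime(u_high) + (1 - pi1) * h_prime(u_low)


psi, pi1, pi0, u2, eps = 1.0, 0.75, 0.25, 0.3, 1e-6
numeric = (cost2(u2 + eps, psi, pi1, pi0) - cost2(u2 - eps, psi, pi1, pi0)) / (2 * eps)
print(abs(numeric - cost2_prime(u2, psi, pi1, pi0)) < 1e-6)  # True
```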

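Having defined the optimal continuations of the contract for the second period, we can now move backward to solve for the optimal long-term contract. Taking into account the expressions above, the principal's problem $(P)$ can be rewritten as

$$(P'):\ \max_{\{\bar u_1, \underline u_1, u_2(\bar q), u_2(\underline q)\}} \ \pi_1\big(\bar S - h(\bar u_1)\big) + (1-\pi_1)\big(\underline S - h(\underline u_1)\big) + \delta\big(\pi_1 V_2(u_2(\bar q)) + (1-\pi_1)V_2(u_2(\underline q))\big)$$

subject to

$$\Delta\pi\Big(\bar u_1 - \underline u_1 + \delta\big(u_2(\bar q) - u_2(\underline q)\big)\Big) \ge \psi, \qquad (8.39)$$

$$\pi_1\bar u_1 + (1-\pi_1)\underline u_1 + \delta\big(\pi_1 u_2(\bar q) + (1-\pi_1)u_2(\underline q)\big) \ge \psi, \qquad (8.40)$$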

where (8.39) is the first-period incentive constraint and (8.40) the agent's intertemporal participation constraint. Both constraints are rewritten as functions of the expected continuation payoffs $u_2(\bar q)$ and $u_2(\underline q)$ that the agent will get in period 2 following each possible first-period output. Let us introduce the respective multipliers $\lambda$ and $\mu$. Note that $(P')$ is a concave problem with linear constraints defining a nonempty constrained set, so that the Kuhn-Tucker first-order conditions are necessary and sufficient to characterize optimality. Optimizing with respect to $\bar u_1$ and $\underline u_1$ yields, respectively,

$$\pi_1 h'(\bar u_1) = \lambda\Delta\pi + \mu\pi_1, \qquad (8.41)$$

$$(1-\pi_1) h'(\underline u_1) = -\lambda\Delta\pi + \mu(1-\pi_1). \qquad (8.42)$$

Summing those two equations, we obtain

$$\mu = \pi_1 h'(\bar u_1) + (1-\pi_1)h'(\underline u_1) > 0, \qquad (8.43)$$

and thus the agent’s intertemporal participation constraint (8.40) is necessarily binding.

Also, from (8.41) and (8.42) we immediately get

$$\lambda = \frac{\pi_1(1-\pi_1)}{\Delta\pi}\big(h'(\bar u_1) - h'(\underline u_1)\big). \qquad (8.44)$$

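Optimizing with respect to $u_2(\bar q)$ and $u_2(\underline q)$ yields (after dividing by $\delta$)

$$\pi_1 C_2^{SB\prime}(u_2(\bar q)) = \lambda\Delta\pi + \mu\pi_1, \qquad (8.45)$$

$$(1-\pi_1) C_2^{SB\prime}(u_2(\underline q)) = -\lambda\Delta\pi + \mu(1-\pi_1). \qquad (8.46)$$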

Hence, we have another way of writing the multiplier λ:

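$$\lambda = \frac{\pi_1(1-\pi_1)}{\Delta\pi}\Big(C_2^{SB\prime}(u_2(\bar q)) - C_2^{SB\prime}(u_2(\underline q))\Big). \qquad (8.47)$$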

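Comparing (8.41) with (8.45) and (8.42) with (8.46) yields, respectively,

$$h'(\bar u_1) = C_2^{SB\prime}(u_2(\bar q)), \qquad (8.48)$$

$$h'(\underline u_1) = C_2^{SB\prime}(u_2(\underline q)). \qquad (8.49)$$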

Those two equations show that the following martingale property must be satisfied at the optimum:

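$$h'(u_1(q_1)) = E_{q_2}\Big(h'\big(\tilde u_2(q_1, \tilde q_2)\big)\Big) \quad \text{for all } q_1 \in \{\underline q, \bar q\}, \qquad (8.50)$$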

where $u_1(q_1)$ is the first-period utility following output $q_1$ (that is, $u_1(\bar q) = \bar u_1$ and $u_1(\underline q) = \underline u_1$), $E_{q_2}(\cdot)$ denotes the expectation operator with respect to the distribution of second-period output $\tilde q_2$ induced by a high effort at this date, and $\tilde u_2(q_1, \tilde q_2)$ is the random value of second-period utilities.

This martingale property shows that the marginal cost of giving up some rewards to the agent in period 1 following any output $q_1$ must be equal to the expected marginal cost of giving up these rewards in the corresponding continuation of the contract. This property is rather important. It says that, because of the agent's risk aversion, the principal spreads the agent's rewards and punishments intertemporally to minimize the cost of implementing a high effort in period 1. Concentrating all the rewards and punishments needed to induce effort in period 1 in that period alone is clearly suboptimal. The principal prefers to smooth the burden of the incentive constraint between today and tomorrow.

Moreover, because $\lambda \ge 0$ and $C_2^{SB\prime}(\cdot)$ is increasing ($h$ being strictly convex), (8.47) implies that $u_2(\bar q) \ge u_2(\underline q)$. But the equality is impossible, because (8.48) and (8.49) would then imply $\bar u_1 = \underline u_1$, and (8.39) would be violated. Since $u_2(\bar q) > u_2(\underline q)$, and hence $C_2^{SB\prime}(u_2(\bar q)) > C_2^{SB\prime}(u_2(\underline q))$, (8.47) gives $\lambda > 0$: the agent's intertemporal incentive constraint (8.39) is binding. As $u_2(\bar q) > u_2(\underline q)$ (and, by (8.48) and (8.49), $\bar u_1 > \underline u_1$), the high first-period output is rewarded not only in period 1 but also in period 2. The optimal long-term contract with full commitment exhibits memory. Note that this memory property implies more generally that the first-period payments and their expected continuations covary positively.

The main features of the optimal contract are summarized in proposition 8.4, which provides a useful benchmark with which we can assess the impact of various limitations that the principal may face in contracting with the agent in a long-term relationship.

Proposition 8.4: With a twice-repeated moral hazard problem, the optimal long-term contract with full commitment exhibits memory, and the martingale property $h'(u_1(q_1)) = E_{q_2}\big(h'(\tilde u_2(q_1, \tilde q_2))\big)$ is satisfied for all $q_1$.

To get further insights on the structure of the agent's payments in a long-term relationship, let us come back to our usual quadratic example and assume that $h(u) = u + \frac{r}{2}u^2$ for some $r > 0$ and ![](). Since $h'(u) = 1 + ru$ is linear, the martingale property (8.50) immediately yields $u_1(q_1) = \pi_1\bar u_2^D(q_1) + (1-\pi_1)\underline u_2^D(q_1) = u_2(q_1) + \psi$, for all $q_1$ in $\{\underline q, \bar q\}$. Inserting those equalities into (8.39) and (8.40), which are binding, yields, respectively, $\bar u_1 - \underline u_1 = \frac{\psi}{(1+\delta)\Delta\pi}$ and $\pi_1\bar u_1 + (1-\pi_1)\underline u_1 = \psi$.

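Finally, the structure of the payments at each date can be fully derived as

$$\bar u_1^{SB} = \psi + \frac{(1-\pi_1)\psi}{(1+\delta)\Delta\pi}, \qquad (8.51)$$

$$\underline u_1^{SB} = \psi - \frac{\pi_1\psi}{(1+\delta)\Delta\pi}, \qquad (8.52)$$

$$\bar u_2^{SB}(\bar q) = \psi + \frac{(1-\pi_1)\psi}{(1+\delta)\Delta\pi} + \frac{(1-\pi_1)\psi}{\Delta\pi}, \qquad (8.53)$$

$$\underline u_2^{SB}(\bar q) = \psi + \frac{(1-\pi_1)\psi}{(1+\delta)\Delta\pi} - \frac{\pi_1\psi}{\Delta\pi}, \qquad (8.54)$$

$$\bar u_2^{SB}(\underline q) = \psi - \frac{\pi_1\psi}{(1+\delta)\Delta\pi} + \frac{(1-\pi_1)\psi}{\Delta\pi}, \qquad (8.55)$$

$$\underline u_2^{SB}(\underline q) = \psi - \frac{\pi_1\psi}{(1+\delta)\Delta\pi} - \frac{\pi_1\psi}{\Delta\pi}. \qquad (8.56)$$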

The values of these transfers immediately highlight two phenomena. First, compared with a static one-shot relationship, the first-period power of incentives needed to induce a first-period effort is lower. A factor $\frac{1}{1+\delta}$, strictly less than 1, reduces the risk borne by the agent during this first period in order to induce effort at this date. These formulas also highlight the fact that, as the future matters more ($\delta$ large), the risk borne during the first period diminishes and more risk is shifted to the second period. Second, an early success (resp. failure) translates into future compensations that are shifted upward (resp. downward). This captures the effect of the past history of performances on future compensations.
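The closed-form payments of the quadratic example are easy to check numerically. The sketch below is illustrative only: the function name `full_commitment_contract` and the parameter values are hypothetical. It evaluates the full-commitment payments, shows how the first-period spread shrinks as $\delta$ grows, and (in the checks) confirms that (8.39) and (8.40) bind, that the martingale property holds with a linear $h'$, and that the promise after a failure is negative.

```python
def full_commitment_contract(psi, pi1, pi0, delta):
    """Quadratic-h full-commitment contract: first-period utilities,
    continuation promises u2(q1), and second-period utilities after each q1."""
    dpi = pi1 - pi0
    k = psi / ((1 + delta) * dpi)     # first-period spread, damped by 1/(1+delta)
    u1 = {"high": psi + (1 - pi1) * k, "low": psi - pi1 * k}
    contract = {"u1": u1, "promise": {}, "u2": {}}
    for q1 in ("high", "low"):
        promise = u1[q1] - psi        # martingale with linear h': u1(q1) = u2(q1) + psi
        contract["promise"][q1] = promise
        contract["u2"][q1] = (promise + psi + (1 - pi1) * psi / dpi,
                              promise + psi - pi1 * psi / dpi)
    return contract


psi, pi1, pi0 = 1.0, 0.75, 0.25   # hypothetical parameters
for delta in (0.0, 0.5, 0.9):
    c = full_commitment_contract(psi, pi1, pi0, delta)
    spread = c["u1"]["high"] - c["u1"]["low"]
    print(delta, round(spread, 4))
# prints: 0.0 2.0 | 0.5 1.3333 | 0.9 1.0526
```

With $\delta = 0$ the spread is the static one, $\psi/\Delta\pi$; as $\delta$ rises, part of the incentive burden moves into the continuation promises.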

Lambert (1983), Malcomson and Spinnewyn (1988), and Rogerson (1985b) all showed that the optimal long-term contract exhibits memory. Rey and Salanié (1990) showed how long-term contracts can generally be replaced by two-period short-term contracts in a framework with T periods. Chiappori et al. (1994) offered an interesting survey of the literature.

3. Renegotiation-Proofness

Importantly, the recursive procedure that we have used in section 8.2.2 to compute the optimal long-term contract shows that it is in fact sequentially optimal. Given the promise u2(q1) made by the principal following a first-period outcome q1, there is no point in the principal offering a contract other than the continuation for the second period of the optimal long-term contract above. By definition, the optimal long-term contract is thus renegotiation-proof.

4. Reneging on the Contract

In the previous analysis, we have assumed that the agent commits himself to always staying in the relationship with the principal once he has accepted the long-term contract. The optimal contract with full commitment has only a single participation constraint. This contract forces the agent to stay in the relationship in period 2, even if his expected payoff for this period is negative. This is a rather strong assumption on the enforcement of a contract. Suppose now that the agent cannot commit himself to staying in a relationship if he gets less than his reservation value, normalized at zero. To avoid any breach of contract, the continuation payoffs $u_2(q_1)$ following a first-period output $q_1$ must now satisfy the second-period participation, or renegation-proofness, constraints

$$u_2(q_1) \ge 0 \quad \text{for all } q_1 \in \{\underline q, \bar q\}. \qquad (8.57)$$

Considering the possibility of a breach of contract therefore puts more constraints on the principal's problem $(P')$. If the principal were unconstrained, he would like to decrease $u_2(\underline q)$ and increase $u_2(\bar q)$, because playing on future promises also helps to provide first-period incentives, as (8.39) has already shown us. However, decreasing $u_2(\underline q)$ to its optimal value with no renegation on the contract conflicts with the second-period participation constraints (8.57). Indeed, using (8.55) and (8.56), we observe that the optimal long-term contract always violates the constraint (8.57), because

$$u_2^{SB}(\underline q) = \pi_1\bar u_2^{SB}(\underline q) + (1-\pi_1)\underline u_2^{SB}(\underline q) - \psi = -\frac{\pi_1\psi}{(1+\delta)\Delta\pi} < 0.$$

Hence, when the agent can walk away from the relationship in the second period, the constraint (8.57) must be binding following a low first-period output. The principal is then strongly limited in the second-period punishment he can inflict on the agent following this history of the game.

The optimal renegation-proof contract entails that (8.57) is binding for $q_1 = \underline q$. Following such a low first-period output, it should be clear that the continuation contract replicates the static contract found in chapter 4. Let us now derive the other components of the long-term contract, i.e., the levels of utilities in the first-period contract and in the second-period continuation following a high first-period output $q_1 = \bar q$. The intertemporal incentive constraint is now written as

$$\Delta\pi\big(\bar u_1 - \underline u_1 + \delta u_2(\bar q)\big) \ge \psi. \qquad (8.58)$$

Similarly, the intertemporal participation constraint is obtained as

$$\pi_1\bar u_1 + (1-\pi_1)\underline u_1 + \delta\pi_1 u_2(\bar q) \ge \psi. \qquad (8.59)$$

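Taking into account the binding renegation-proofness constraint $u_2(\underline q) = 0$, the principal's problem can be rewritten as

$$\max_{\{\bar u_1, \underline u_1, u_2(\bar q)\}} \ \pi_1\big(\bar S - h(\bar u_1)\big) + (1-\pi_1)\big(\underline S - h(\underline u_1)\big) + \delta\big(\pi_1 V_2(u_2(\bar q)) + (1-\pi_1)V_2(0)\big) \quad \text{subject to (8.58) and (8.59).}$$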

We index the solution to this problem with a superscript R meaning renegation-proof. We now denote the respective multipliers of the two constraints (8.58) and (8.59) by $\lambda$ and $\mu$. The corresponding first-order conditions, obtained by optimizing with respect to $\bar u_1$, $\underline u_1$, and $u_2(\bar q)$, are written as

$$\pi_1 h'(\bar u_1^R) = \lambda\Delta\pi + \mu\pi_1, \qquad (8.60)$$

$$(1-\pi_1) h'(\underline u_1^R) = -\lambda\Delta\pi + \mu(1-\pi_1), \qquad (8.61)$$

$$\pi_1 C_2^{SB\prime}(u_2^R(\bar q)) = \lambda\Delta\pi + \mu\pi_1, \qquad (8.62)$$

where $\bar u_1^R$ and $\underline u_1^R$ are the optimal payoffs in the first period, and $u_2^R(\bar q)$ is the optimal continuation payoff following a high output in the first period.

Summing (8.60) and (8.61), we obtain $\mu = \pi_1 h'(\bar u_1^R) + (1-\pi_1)h'(\underline u_1^R) > 0$, and the participation constraint (8.59) is again binding. Inserting this value of $\mu$ into (8.60), we finally get $\lambda = \frac{\pi_1(1-\pi_1)}{\Delta\pi}\big(h'(\bar u_1^R) - h'(\underline u_1^R)\big)$. Using (8.60) and (8.62), we also obtain

$$h'(\bar u_1^R) = C_2^{SB\prime}(u_2^R(\bar q)) = \pi_1 h'\big(\bar u_2^R(\bar q)\big) + (1-\pi_1)h'\big(\underline u_2^R(\bar q)\big). \qquad (8.63)$$

This equation is again a martingale property, which now applies only following a first-period success. Smoothing the rewards for a first-period success between the two periods calls for equalizing the marginal cost of rewarding the agent in period 1 with its future expected value in period 2. Using the quadratic specification of $h(\cdot)$, the martingale property (8.63) is rewritten as

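$$\bar u_1^R = \pi_1\bar u_2^R(\bar q) + (1-\pi_1)\underline u_2^R(\bar q) = u_2^R(\bar q) + \psi. \qquad (8.64)$$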

As an exercise, we leave it to the reader to check that λ> 0.

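Using (8.64) with the binding constraints (8.58) and (8.59) yields a linear system of three equations in three unknowns. Solving this system, we first obtain the following expressions of the agent's payoffs:

$$\bar u_1^R = \psi + \frac{(1-\pi_1)\psi}{(1+\delta)\Delta\pi}, \qquad \underline u_1^R = \psi - \frac{\pi_1\psi}{\Delta\pi}, \qquad u_2^R(\bar q) = \frac{(1-\pi_1)\psi}{(1+\delta)\Delta\pi}.$$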

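Taking into account that $u_2^R(\underline q) = 0$ and that each second-period continuation takes the form derived for $(P_2(q_1))$, we also get

$$\bar u_2^R(\bar q) = \psi + \frac{(1-\pi_1)\psi}{(1+\delta)\Delta\pi} + \frac{(1-\pi_1)\psi}{\Delta\pi}, \qquad \underline u_2^R(\bar q) = \psi + \frac{(1-\pi_1)\psi}{(1+\delta)\Delta\pi} - \frac{\pi_1\psi}{\Delta\pi},$$

$$\bar u_2^R(\underline q) = \psi + \frac{(1-\pi_1)\psi}{\Delta\pi}, \qquad \underline u_2^R(\underline q) = \psi - \frac{\pi_1\psi}{\Delta\pi}.$$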
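The three-equation linear system can also be solved mechanically, which is a useful cross-check on the closed forms. In the sketch below, the helpers `solve_linear` (plain Gaussian elimination with partial pivoting) and `renegation_proof_contract`, as well as the parameter values, are hypothetical; the unknowns are $(\bar u_1, \underline u_1, u_2(\bar q))$, and the equations are (8.64) together with the binding versions of (8.58) and (8.59).

```python
def solve_linear(a, b):
    """Solve a small dense system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(m[i][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for i in range(col + 1, n):
            factor = m[i][col] / m[col][col]
            for j in range(col, n + 1):
                m[i][j] -= factor * m[col][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def renegation_proof_contract(psi, pi1, pi0, delta):
    """Return (u1_high, u1_low, u2_high) solving (8.64), (8.58), (8.59) as equalities."""
    dpi = pi1 - pi0
    a = [
        [1.0, 0.0, -1.0],              # (8.64): u1_high - u2_high = psi
        [dpi, -dpi, dpi * delta],      # (8.58) binding
        [pi1, 1 - pi1, delta * pi1],   # (8.59) binding
    ]
    return solve_linear(a, [psi, psi, psi])


psi, pi1, pi0, delta = 1.0, 0.75, 0.25, 0.9   # hypothetical parameters
u1_high, u1_low, u2_high = renegation_proof_contract(psi, pi1, pi0, delta)
dpi = pi1 - pi0
print([round(x, 4) for x in (u1_high, u1_low, u2_high)])  # [1.2632, -0.5, 0.2632]
```

Compared with the full-commitment values at the same parameters, only the payoffs after a first-period failure change, in line with proposition 8.5 below.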

Comparing these expressions of the payoffs with those obtained when the agent cannot leave the relationship in period 2, we can state proposition 8.5.

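Proposition 8.5: Assuming that $h(\cdot)$ is quadratic, with a twice-repeated moral hazard problem and a renegation-proofness constraint, the optimal long-term contract is the same as with full commitment, except for the payoffs corresponding to a low first-period output: $\underline u_1^R = \psi - \frac{\pi_1\psi}{\Delta\pi} < \underline u_1^{SB}$, $\bar u_2^R(\underline q) = \psi + \frac{(1-\pi_1)\psi}{\Delta\pi} > \bar u_2^{SB}(\underline q)$, and $\underline u_2^R(\underline q) = \psi - \frac{\pi_1\psi}{\Delta\pi} > \underline u_2^{SB}(\underline q)$.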

The renegation-proofness constraint (8.57) quite significantly affects the structure of the agent's payoffs following a low first-period output. However, the optimal long-term contract still exhibits memory and tracks the agent's performances over time, as with full commitment. An early success implies some greater rewards later on. Because the principal can no longer spread a punishment following a low first-period output $q_1 = \underline q$ between periods 1 and 2, all this punishment must be inflicted on the agent in the first period. Following such a low first-period output, the agent receives in period 2 the optimal static contract, which leaves him exactly his reservation utility of zero.
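Since both contracts implement a high effort in both periods, the principal's expected benefit is identical, and the loss due to the renegation-proofness constraint shows up entirely in the expected cost of the agent's utilities. The sketch below compares the two expected costs in the quadratic example; the helper `expected_cost` and the parameter values are hypothetical.

```python
R = 0.5  # hypothetical coefficient in h(u) = u + R * u**2 / 2


def h(u):
    return u + R * u * u / 2


def expected_cost(u1_high, u1_low, promise_high, promise_low, psi, pi1, pi0, delta):
    """Expected discounted cost: E[h(u1)] + delta * E[second-period cost of the promise]."""
    dpi = pi1 - pi0

    def cost2(u2):
        # cost of the binding second-period contract that delivers the promise u2
        return (pi1 * h(u2 + psi + (1 - pi1) * psi / dpi)
                + (1 - pi1) * h(u2 + psi - pi1 * psi / dpi))

    first = pi1 * h(u1_high) + (1 - pi1) * h(u1_low)
    second = pi1 * cost2(promise_high) + (1 - pi1) * cost2(promise_low)
    return first + delta * second


psi, pi1, pi0, delta = 1.0, 0.75, 0.25, 0.9   # hypothetical parameters
dpi = pi1 - pi0
k = psi / ((1 + delta) * dpi)

# Full commitment: promises u2(q1) = u1(q1) - psi; the promise after failure is negative.
cost_fc = expected_cost(psi + (1 - pi1) * k, psi - pi1 * k,
                        (1 - pi1) * k, -pi1 * k, psi, pi1, pi0, delta)
# Renegation-proof: u2(q_low) = 0, so the punishment is concentrated in period 1.
cost_rp = expected_cost(psi + (1 - pi1) * k, psi - pi1 * psi / dpi,
                        (1 - pi1) * k, 0.0, psi, pi1, pi0, delta)
print(round(cost_fc, 4), round(cost_rp, 4), cost_rp > cost_fc)
```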

One-sided lack of commitment was studied initially by Lambert (1983), who showed that the memory result still holds in this framework. Atkeson (1991) analyzed the infinitely repeated contractual model with one-sided commitment. Thomas and Worrall (1988) provided an analysis of the optimal long-term contract with two-sided lack of commitment in an infinitely repeated relationship without moral hazard.

5. Saving

In the framework of section 8.2.2, we have assumed that the principal has the full ability to restrict the agent's access to the capital market. We have allowed the agent neither to save nor to borrow: all the transfers received were immediately consumed. This seems a rather strong assumption, in particular given the fact that the agent would like to save a positive amount in the first period when he receives the second-best optimal long-term contract described in proposition 8.4. To see this point, consider the impact of saving an amount $s$ in the first period, following a first-period output $q_1$. The agent's expected utility is then written as

$$u\big(t_1(q_1) - s\big) + \delta\Big(E_{q_2}\Big(u\big(t_2(q_1, \tilde q_2) + (1+i)s\big)\Big) - \psi\Big),$$

where $i$ is the interest rate and $E_{q_2}(\cdot)$ denotes the expectation operator with respect to the second-period production induced by a high effort. In a perfect credit market, the interest rate is $i = \frac{1}{\delta} - 1$. Marginally increasing $s$ above zero improves the agent's intertemporal utility whenever

$$u'\big(t_1(q_1)\big) < \delta(1+i)\,E_{q_2}\Big(u'\big(t_2(q_1, \tilde q_2)\big)\Big). \qquad (8.71)$$

Because the optimal contract satisfies the martingale property (8.50), and $h'(u(t)) = 1/u'(t)$, we also have

$$\frac{1}{u'(t_1(q_1))} = E_{q_2}\Big(\frac{1}{u'(t_2(q_1, \tilde q_2))}\Big) > \frac{1}{E_{q_2}\big(u'(t_2(q_1, \tilde q_2))\big)},$$

where the last inequality is obtained by applying Jensen's inequality to the strictly convex function $x \mapsto 1/x$ on $x > 0$ (the second-period transfers are not constant, because of the second-period incentive constraint). Finally, using $\delta(1+i) = 1$, the strict inequality (8.71) holds, and a positive saving is thus optimal.
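The saving argument can be checked in the quadratic example. As a sketch, assuming $h(u) = u + \frac{R}{2}u^2$ with a hypothetical $R = 0.5$ (so that $u(t) = (\sqrt{1 + 2Rt} - 1)/R$ and $u'(t) = 1/h'(u(t))$), hypothetical parameter values, and a gross return $1/\delta$ on savings, the code evaluates the full-commitment contract after a first-period success, checks (8.71), and measures the marginal value of saving at $s = 0$ by a central difference.

```python
import math

R = 0.5  # hypothetical coefficient: h(u) = u + R*u**2/2, so u(t) = (sqrt(1 + 2Rt) - 1)/R


def h(u):
    return u + R * u * u / 2


def utility(t):
    return (math.sqrt(1 + 2 * R * t) - 1) / R


def marginal_utility(t):
    return 1 / math.sqrt(1 + 2 * R * t)   # equals 1 / h'(u(t))


psi, pi1, pi0, delta = 1.0, 0.75, 0.25, 0.9   # hypothetical parameters
dpi = pi1 - pi0
k = psi / ((1 + delta) * dpi)
u1 = psi + (1 - pi1) * k                      # first-period utility after a success
promise = u1 - psi                            # continuation promise after a success
u2_high = promise + psi + (1 - pi1) * psi / dpi
u2_low = promise + psi - pi1 * psi / dpi
t1, t2_high, t2_low = h(u1), h(u2_high), h(u2_low)


def agent_value(s):
    """Utility after a first-period success when saving s at gross return 1/delta
    (the effort cost is omitted because it does not depend on s)."""
    return utility(t1 - s) + delta * (pi1 * utility(t2_high + s / delta)
                                      + (1 - pi1) * utility(t2_low + s / delta))


lhs = marginal_utility(t1)
rhs = pi1 * marginal_utility(t2_high) + (1 - pi1) * marginal_utility(t2_low)
eps = 1e-6
slope = (agent_value(eps) - agent_value(-eps)) / (2 * eps)
print(lhs < rhs, slope > 0)   # True True
```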

This positive saving perturbs the agent's incentives to exert effort in the second period. Indeed, because the second-period transfers $(\bar t_2(q_1), \underline t_2(q_1))$ are such that the second-period incentive constraints are binding, a positive saving, which decreases the marginal utility of income, makes the agent prefer a low effort. This calls for a careful reoptimization of the principal's problem.

More generally, when the saving made by the agent is not observable by the principal, it plays the role of another moral hazard variable that the principal can only control indirectly, through the long-term contract he offers.

Let us now characterize some features of the optimal contract with saving. First, note that, given a first-period output $q_1$ and any long-term contract $\{t_1(q_1), t_2(q_1, q_2)\}$, the agent chooses to save an amount $s^*(q_1)$ that equalizes his marginal utilities of income in both periods (with a gross return of $1/\delta$ on savings):

$$u'\big(t_1(q_1) - s^*(q_1)\big) = E_{q_2}\Big(u'\Big(t_2(q_1, \tilde q_2) + \frac{s^*(q_1)}{\delta}\Big)\Big). \qquad (8.73)$$

In computing the expectation above, we have assumed that the agent anticipates that he will exert a high effort in period 2, so that the probability that $\tilde q_2$ is equal to $\bar q$ is $\pi_1$. Of course, the choice of effort in the continuation is in fact endogenous and depends on how much the agent would like to save. We will come back to this issue below.

By shifting income from one period to the other, the agent is able to play on the incentive power of the long-term contract he receives from the principal. Now let us imagine that the principal replaces this initial contract by a new long-term contract $\{\hat t_1(q_1), \hat t_2(q_1, q_2)\}$, which is designed to replicate the agent's choice and the final allocation of utilities that the latter gets in each state of nature. This new contract should thus satisfy $\hat t_1(q_1) = t_1(q_1) - s^*(q_1)$ and $\hat t_2(q_1, q_2) = t_2(q_1, q_2) + \frac{s^*(q_1)}{\delta}$. With this new contract, the marginal utilities of income are the same in both periods, because by definition

$$u'\big(\hat t_1(q_1)\big) = E_{q_2}\Big(u'\big(\hat t_2(q_1, \tilde q_2)\big)\Big).$$

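The agent therefore chooses neither to save nor to borrow. Moreover, the intertemporal costs of both contracts are the same for the principal, since

$$\hat t_1(q_1) + \delta E_{q_2}\big(\hat t_2(q_1, \tilde q_2)\big) = t_1(q_1) - s^*(q_1) + \delta E_{q_2}\big(t_2(q_1, \tilde q_2)\big) + s^*(q_1) = t_1(q_1) + \delta E_{q_2}\big(t_2(q_1, \tilde q_2)\big).$$

Hence, there is no loss of generality in restricting the principal to offer saving-proof long-term contracts.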

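The saving-proofness constraint, however, requires that the following martingale property, obtained from (8.73) with $s^*(q_1) = 0$, be satisfied:

$$\frac{1}{h'(u_1(q_1))} = E_{q_2}\Big(\frac{1}{h'\big(\tilde u_2(q_1, \tilde q_2)\big)}\Big) \quad \text{for all } q_1 \in \{\underline q, \bar q\}. \qquad (8.75)$$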

Condition (8.75) significantly constrains the set of implementable allocations and raises the agency cost of implementing a high effort. The principal can no longer spread the future expected payoffs $u_2(\bar q)$ and $u_2(\underline q)$ as he would like in order to facilitate the first-period provision of incentives without inducing saving.

However, the martingale property (8.75) is not the only constraint on the principal's problem. It would be if the stochastic production process in period 2 were completely exogenous. Under moral hazard, however, the choice of effort and the stochastic production process in this second period are also endogenous: they depend, in a rather complex way, on how much the agent has saved in the first period and on the current contract. This means that, although the principal can be restricted to saving-proof long-term contracts on the equilibrium path, this restriction is no longer valid when the agent decides to change his first-period saving so that he prefers exerting a low effort in the second-period continuation. Given a long-term contract $\{t_1(q_1), t_2(q_1, q_2)\}$ that is saving-proof on the equilibrium path, the agent, by saving $s'$ and exerting no effort in period 2, gets

$$u\big(t_1(q_1) - s'\big) + \delta\Big(\pi_0\, u\Big(\bar t_2(q_1) + \frac{s'}{\delta}\Big) + (1-\pi_0)\, u\Big(\underline t_2(q_1) + \frac{s'}{\delta}\Big)\Big).$$

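To induce the agent to exert effort in period 2, the following incentive constraint must be satisfied by a saving-proof long-term contract:

$$u\big(t_1(q_1)\big) + \delta\Big(\pi_1 u\big(\bar t_2(q_1)\big) + (1-\pi_1)u\big(\underline t_2(q_1)\big) - \psi\Big) \ge \max_{s'}\Big\{u\big(t_1(q_1) - s'\big) + \delta\Big(\pi_0 u\Big(\bar t_2(q_1) + \frac{s'}{\delta}\Big) + (1-\pi_0)u\Big(\underline t_2(q_1) + \frac{s'}{\delta}\Big)\Big)\Big\} \quad \text{for all } q_1. \qquad (8.76)$$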

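Let us consider a second-period contract such that $\bar t_2(q_1) > \underline t_2(q_1)$ for any $q_1$ in $\{\underline q, \bar q\}$. It should be noted that the derivative of the right-hand side of (8.76) with respect to $s'$, evaluated at $s' = 0$, is positive, because

$$\pi_0 u'\big(\bar t_2(q_1)\big) + (1-\pi_0)u'\big(\underline t_2(q_1)\big) > \pi_1 u'\big(\bar t_2(q_1)\big) + (1-\pi_1)u'\big(\underline t_2(q_1)\big) = u'\big(t_1(q_1)\big),$$

since $u'(\cdot)$ is decreasing and $\pi_1 > \pi_0$, the last equality being the saving-proofness condition on the equilibrium path. Using the fact that the agent's objective function is concave in $s'$ and maximized at zero saving when a positive effort is exerted in the second period, we can conclude that $s'^*$, defined as the maximizer of the right-hand side of (8.76), satisfies $s'^* > 0$.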
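The double deviation can be illustrated numerically. In this sketch, log utility, the transfers $\bar t_2 = 2$ and $\underline t_2 = 1$, and the other parameter values are hypothetical; $t_1$ is set so that the contract is saving-proof on the equilibrium path, and a ternary search (a standard method for unimodal functions, chosen here for simplicity) locates the optimal saving when the agent plans a high effort and when he plans to shirk.

```python
import math

# Hypothetical primitives: log utility u(t) = ln t, so u'(t) = 1/t.
pi1, pi0, delta = 0.75, 0.25, 0.9
t2_high, t2_low = 2.0, 1.0        # hypothetical second-period transfers, t2_high > t2_low
# Saving-proofness on the equilibrium path: u'(t1) = pi1 u'(t2_high) + (1 - pi1) u'(t2_low)
t1 = 1 / (pi1 / t2_high + (1 - pi1) / t2_low)


def deviation_value(s, prob_high):
    """Utility when saving s (gross return 1/delta) and facing success probability
    prob_high in period 2; the effort cost is omitted since it does not depend on s."""
    return math.log(t1 - s) + delta * (prob_high * math.log(t2_high + s / delta)
                                       + (1 - prob_high) * math.log(t2_low + s / delta))


def argmax_concave(f, lo, hi, iterations=200):
    """Ternary search for the maximizer of a strictly concave function on (lo, hi)."""
    for _ in range(iterations):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if f(m1) < f(m2):
            lo = m1
        else:
            hi = m2
    return (lo + hi) / 2


lower = -delta * t2_low + 1e-9    # keeps second-period consumption positive
upper = t1 - 1e-9                 # keeps first-period consumption positive
s_on_path = argmax_concave(lambda s: deviation_value(s, pi1), lower, upper)
s_shirk = argmax_concave(lambda s: deviation_value(s, pi0), lower, upper)
print(abs(s_on_path) < 1e-6, s_shirk > 0)   # True True
```

The agent who plans a high effort does not want to save, while the agent who plans to shirk does: the saving and effort deviations reinforce each other.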

Remark: It should also be noted that this double deviation along both the saving and the effort dimensions introduces a positive slack into the second-period incentive constraint. Indeed, the right-hand side of (8.76) is strictly greater than what the agent can get by not saving at all and exerting no effort, namely $u(t_1(q_1)) + \delta\big(\pi_0 u(\bar t_2(q_1)) + (1-\pi_0)u(\underline t_2(q_1))\big)$. Simplifying, we finally get

$$\Delta\pi\Big(u\big(\bar t_2(q_1)\big) - u\big(\underline t_2(q_1)\big)\Big) > \psi. \qquad (8.78)$$

This strict inequality implies that the optimal contract with full commitment is not sequentially optimal when saving is allowed. Indeed, the optimal full-commitment contract requires that the second-period incentive constraint be slack, as shown by (8.78). However, once the agent has decided not to save, and once the second period comes, the principal no longer has any reason to leave such a slack. The principal would like to renegotiate and offer a new contract such that the second-period incentive constraints are binding.

The general lesson of this section is that allowing saving and borrowing may significantly constrain what can be achieved by a long-term contract. Moreover, it introduces much complexity into the analysis.

The design of the optimal contract with saving and noncommitment is rather complex. Chiappori et al. (1994) provided some insights into the structure of the solution. Things are simpler with full commitment. Malcomson and Spinnewyn (1988) showed that the optimal long-term contract can be replicated by a sequence of optimal static contracts when the agent's saving decision can be controlled by the principal. Fudenberg, Holmström, and Milgrom (1990) obtained a similar result in the case where the agent has additively separable CARA preferences, where effort is counted in monetary terms, and where the production technology is common knowledge. They showed that the associated one-period contract corresponds to the optimal static contract offered to an agent with a lower degree of absolute risk aversion.

6. Infinitely Repeated Relationship

The two-period model is a highly stylized view of a long-term principal-agent relationship. Financial contracts, labor contracts, and tenancy contracts are most often enduring relationships lasting for a long period of time. Let us now move to an infinite-horizon model extending the basic framework of section 8.2.2. As we will see, the optimal long-term contract still exhibits many of the features of our two-period example. The novelty is that the second period is no longer the end of the relationship. All periods are alike, and the principal faces a similar problem of control in each period. It is rather intuitive that the whole structure of the contract can now be solved recursively. Given an initial promise of utility from any period on, the principal computes an optimal contract that stipulates not only the agent's current payments but also the utility levels promised from the next period on, following each current realization of the production process. The continuation of the optimal contract from the next period on is then similar to the contract itself. Hence, the whole contract can be computed recursively.

The recursive structure of the optimal contract also implies that, at any given date, the contract depends on the whole history of past outcomes only through the utility level promised following such a history. This utility level can be viewed as a stochastic state variable that summarizes the past history of the agent’s perfor- mances. Therefore, the optimal contract exhibits a Markov property.

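To better describe the optimal contract, let us denote by $V(\cdot)$ the value function associated with the following dynamic programming problem:

$$(P):\ V(U) = \max_{\{\bar u, \underline u, \bar U, \underline U\}} \ \pi_1\big(\bar S - h(\bar u) + \delta V(\bar U)\big) + (1-\pi_1)\big(\underline S - h(\underline u) + \delta V(\underline U)\big)$$

subject to

$$\Delta\pi\big(\bar u - \underline u + \delta(\bar U - \underline U)\big) \ge \psi, \qquad (8.79)$$

$$\pi_1(\bar u + \delta\bar U) + (1-\pi_1)(\underline u + \delta\underline U) - \psi \ge U. \qquad (8.80)$$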

$V(U)$ is the value of the principal's problem $(P)$ in an infinitely repeated relationship with moral hazard, assuming that the principal wants to induce a high effort in each period and promises an expected utility level $U$ to the agent over the whole relationship. Note that the principal must stipulate not only the current payments of the agent but also the levels of future utilities $\bar U$ and $\underline U$, which are promised in the continuation of the contract following the respective realizations of $\bar q$ and $\underline q$. The constraints (8.79) and (8.80) are, respectively, the incentive and participation constraints when an expected payoff $U$ has been promised to the agent. These constraints make the role of these continuation payoffs explicit. Given that the principal has promised an expected level of utility $U$ to the agent at a given period, he can get the expected payoff $V(U)$ from that period on. By offering the continuation payoffs $\underline U$ and $\bar U$, the principal knows, by the mere definition of the value function $V(\cdot)$, that he will himself get the continuation payoffs $V(\underline U)$ and $V(\bar U)$.

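Let us denote the respective multipliers of the constraints (8.79) and (8.80) by $\lambda$ and $\mu$. Assuming the concavity of the value function $V(\cdot)$, the optimizations with respect to $\bar U$ and $\underline U$ yield respectively

$$
V'\big(\bar U(U)\big) = -\mu(U) - \lambda(U)\frac{\Delta\pi}{\pi_1}, \tag{8.81}
$$

$$
V'\big(\underline U(U)\big) = -\mu(U) + \lambda(U)\frac{\Delta\pi}{1-\pi_1}, \tag{8.82}
$$

where we make explicit the dependence of the solution on the level of promised utility $U$.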

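Multiplying (8.81) by $\pi_1$ and (8.82) by $1-\pi_1$ and summing, we also obtain

$$
E\big(V'(\tilde U(U))\big) = \pi_1 V'\big(\bar U(U)\big) + (1-\pi_1)V'\big(\underline U(U)\big) = -\mu(U), \tag{8.83}
$$

where $E(\cdot)$ is the expectation operator with respect to the distribution of current output induced by a high effort and $\tilde U(U)$ is the random continuation utility promised to the agent.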

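Optimizing with respect to $\bar u$ and $\underline u$ also yields

$$
h'\big(\bar u(U)\big) = \mu(U) + \lambda(U)\frac{\Delta\pi}{\pi_1}, \tag{8.84}
$$

$$
h'\big(\underline u(U)\big) = \mu(U) - \lambda(U)\frac{\Delta\pi}{1-\pi_1}. \tag{8.85}
$$

Multiplying (8.84) by $\pi_1$ and (8.85) by $1-\pi_1$ and summing, we finally get

$$
E\big(h'(\tilde u(U))\big) = \mu(U), \tag{8.86}
$$

where $\tilde u(U)$ is the random current utility level. Since $h'(\cdot) > 0$, (8.86) gives $\mu(U) > 0$. Hence, the participation constraint (8.80) is necessarily binding.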

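From (8.84) and (8.85), and using (8.81) and (8.82), we also derive the following definition of the multiplier $\lambda$ of (8.79):

$$
\lambda(U) = \frac{\pi_1(1-\pi_1)}{\Delta\pi}\Big(h'\big(\bar u(U)\big) - h'\big(\underline u(U)\big)\Big) = \frac{\pi_1(1-\pi_1)}{\Delta\pi}\Big(V'\big(\underline U(U)\big) - V'\big(\bar U(U)\big)\Big). \tag{8.87}
$$

Because $h(\cdot)$ is convex and $V(\cdot)$ is concave, (8.87) implies that $\bar u(U) > \underline u(U)$ if and only if $\bar U(U) > \underline U(U)$. Constraint (8.79) requires $\bar u(U) - \underline u(U) + \delta\big(\bar U(U) - \underline U(U)\big) \ge \psi/\Delta\pi > 0$. To satisfy it, $\bar u(U) \le \underline u(U)$ and $\bar U(U) \le \underline U(U)$ cannot hold simultaneously. Hence, we necessarily have $\bar u(U) > \underline u(U)$ and $\bar U(U) > \underline U(U)$.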

The economic interpretation of this condition is clear. In an infinitely repeated relationship, the optimal long-term contract again exhibits the memory property. A good performance today is not only rewarded today but also gives the agent a greater continuation payoff. The explanation is the same as in the two-period model of section 8.3.2. To smooth the agency costs of the relationship with the agent, the principal spreads the agent’s rewards and punishments between the current period and its continuation, which now involves the whole future of their relationship.

Moreover, using the Envelope Theorem, we also have $V'(U) = -\mu(U)$. Hence, from (8.83), the marginal value function satisfies the martingale property:

$$
V'(U) = E\big(V'(\tilde U(U))\big). \tag{8.88}
$$

This property characterizes how the principal smooths the agent's rewards over time. One more unit of utility promised today costs him exactly what he expects to gain from having less to promise tomorrow, following any realization of the output. This property generalizes what we have seen in section 8.2.2 to the case of an infinitely repeated relationship.

To make further progress toward the characterization of the optimal contract, we will assume that $h'(u) = \exp(ru)$ for all $u$ in $\mathbb{R}$, with $r > 0$. This corresponds to $h(u) = \exp(ru)/r$, that is, to a utility function $u(\cdot)$ given by $u(t) = \frac{1}{r}\ln(rt)$, which is defined for all $t > 0$.

This specification of the inverse utility function suggests that we should look for a value function of the kind $V(U) = \alpha - \frac{1}{\beta}\exp(\beta U + \gamma)$, where $(\alpha, \beta, \gamma)$ belongs to $\mathbb{R}^3$ with $\beta > 0$. Note that $V(\cdot)$ is then decreasing ($V'(U) = -\exp(\beta U + \gamma) \le 0$) and concave ($V''(U) = -\beta\exp(\beta U + \gamma) \le 0$).

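Using (8.81) and (8.84), we immediately find that

$$
\exp\big(r\bar u(U)\big) = \exp\big(\beta\bar U(U) + \gamma\big), \tag{8.89}
$$

or to put it differently,

$$
\bar u(U) = \frac{\beta\bar U(U) + \gamma}{r}. \tag{8.90}
$$

Similarly, using (8.82) and (8.85), we obtain

$$
\exp\big(r\underline u(U)\big) = \exp\big(\beta\underline U(U) + \gamma\big), \tag{8.91}
$$

that is,

$$
\underline u(U) = \frac{\beta\underline U(U) + \gamma}{r}. \tag{8.92}
$$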

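The general discussion above has shown that the constraints (8.79) and (8.80) are both binding: $\mu(U) > 0$ by (8.86), and $\lambda(U) > 0$ by (8.87) since $\bar u(U) > \underline u(U)$. This yields the following expressions of $\bar u(U)$ and $\underline u(U)$:

$$
\bar u(U) = U + \frac{(1-\pi_0)\psi}{\Delta\pi} - \delta\bar U(U), \tag{8.93}
$$

$$
\underline u(U) = U - \frac{\pi_0\psi}{\Delta\pi} - \delta\underline U(U). \tag{8.94}
$$

Inserting those expressions into (8.90) and (8.92) yields, respectively,

$$
\bar U(U) = \frac{rU + r\frac{(1-\pi_0)\psi}{\Delta\pi} - \gamma}{\beta + r\delta}, \tag{8.95}
$$

and

$$
\underline U(U) = \frac{rU - r\frac{\pi_0\psi}{\Delta\pi} - \gamma}{\beta + r\delta}. \tag{8.96}
$$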
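The passage from the two binding constraints to (8.93) and (8.94) is a 2×2 linear system in the agent's lifetime utility following each output. We label these quantities $a = \bar u + \delta\bar U$ and $b = \underline u + \delta\underline U$ purely for illustration. The Python sketch below solves that system by Cramer's rule for illustrative parameter values (assumptions, not taken from the text). It then compares the solution with $U + (1-\pi_0)\psi/\Delta\pi$ and $U - \pi_0\psi/\Delta\pi$.

```python
# Illustrative parameter values (assumptions, not from the text).
U, pi0, pi1, psi = 0.3, 0.4, 0.8, 0.5
dpi = pi1 - pi0

# Unknowns (hypothetical labels): a = u_bar + delta * U_bar, b = u_low + delta * U_low.
# Binding (8.79):  dpi * a - dpi * b        = psi
# Binding (8.80):  pi1 * a + (1 - pi1) * b  = U + psi
a11, a12, c1 = dpi, -dpi, psi
a21, a22, c2 = pi1, 1 - pi1, U + psi

det = a11 * a22 - a12 * a21          # equals dpi, nonzero because pi1 > pi0
a = (c1 * a22 - a12 * c2) / det      # Cramer's rule for the first unknown
b = (a11 * c2 - c1 * a21) / det      # Cramer's rule for the second unknown

print(f"a = {a:.6f}  vs  U + (1 - pi0) * psi / dpi = {U + (1 - pi0) * psi / dpi:.6f}")
print(f"b = {b:.6f}  vs  U - pi0 * psi / dpi       = {U - pi0 * psi / dpi:.6f}")
```

Subtracting $\delta\bar U(U)$ from $a$ and $\delta\underline U(U)$ from $b$ then gives the two current utility levels.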

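With our specification for $V(\cdot)$, the martingale property (8.88) is rewritten as:

$$
\exp(\beta U) = \pi_1\exp\big(\beta\bar U(U)\big) + (1-\pi_1)\exp\big(\beta\underline U(U)\big). \tag{8.97}
$$

Inserting (8.95) and (8.96) into (8.97) and identifying the right-hand and left-hand sides for all values of $U$ yields

$$
\beta = \frac{r\beta}{\beta + r\delta}, \tag{8.98}
$$

$$
1 = \pi_1\exp\left(\beta\,\frac{r\frac{(1-\pi_0)\psi}{\Delta\pi} - \gamma}{\beta + r\delta}\right) + (1-\pi_1)\exp\left(-\beta\,\frac{r\frac{\pi_0\psi}{\Delta\pi} + \gamma}{\beta + r\delta}\right). \tag{8.99}
$$

Since $\beta > 0$, (8.98) gives $\beta + r\delta = r$. Finally, we get the following expressions of $\beta$ and $\gamma$:

$$
\beta = r(1-\delta), \tag{8.100}
$$

$$
\gamma = \frac{1}{1-\delta}\ln\left(\pi_1\exp\left(r(1-\delta)\frac{(1-\pi_0)\psi}{\Delta\pi}\right) + (1-\pi_1)\exp\left(-r(1-\delta)\frac{\pi_0\psi}{\Delta\pi}\right)\right). \tag{8.101}
$$

These expressions yield the following laws of motion for the expected utility from any period on:

$$
\bar U(U) = U + \frac{(1-\pi_0)\psi}{\Delta\pi} - \frac{\gamma}{r}, \tag{8.102}
$$

$$
\underline U(U) = U - \frac{\pi_0\psi}{\Delta\pi} - \frac{\gamma}{r}. \tag{8.103}
$$

Moreover, the current payoffs are then obtained from (8.93), (8.94), (8.102), and (8.103) as

$$
\bar u(U) = (1-\delta)\left(U + \frac{(1-\pi_0)\psi}{\Delta\pi}\right) + \delta\frac{\gamma}{r}, \tag{8.104}
$$

$$
\underline u(U) = (1-\delta)\left(U - \frac{\pi_0\psi}{\Delta\pi}\right) + \delta\frac{\gamma}{r}. \tag{8.105}
$$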

The laws of motion (8.102) and (8.103) are interesting because they show that the optimal contract still exhibits the memory property in an infinitely repeated relationship. By Jensen's inequality, (8.101) implies that $\psi < \gamma/r < (1-\pi_0)\psi/\Delta\pi$. Hence, a success in any period moves up the agent's expected utility from this period on, $\bar U(U) > U$, whereas a failure moves it down, $\underline U(U) < U$. However, the expected change is negative, $\pi_1(\bar U(U) - U) + (1-\pi_1)(\underline U(U) - U) = \psi - \gamma/r < 0$, which creates a tendency for expected utility to move downward, as we will discuss below.
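As a sanity check on (8.100) to (8.105), the Python sketch below evaluates the closed-form solution for illustrative parameter values. The values of $r$, $\delta$, $\pi_0$, $\pi_1$, $\psi$ and $U$ are assumptions, not taken from the text, and the helper name `closed_form` is hypothetical. The sketch verifies numerically that the incentive constraint (8.79) and the participation constraint (8.80) bind and that the martingale condition (8.97) holds. It also checks that promised utility rises after a success and falls after a failure, with a negative expected change $\psi - \gamma/r$.

```python
import math


def closed_form(U, r, delta, pi0, pi1, psi):
    """Hypothetical helper: evaluate (8.100)-(8.105) at promised utility U.

    Assumes r > 0, 0 < delta < 1, 0 < pi0 < pi1 < 1 and psi > 0.
    Returns (u_bar, u_low, U_bar, U_low, beta, gamma).
    """
    dpi = pi1 - pi0
    beta = r * (1 - delta)                                                  # (8.100)
    m = (pi1 * math.exp(beta * (1 - pi0) * psi / dpi)
         + (1 - pi1) * math.exp(-beta * pi0 * psi / dpi))
    gamma = math.log(m) / (1 - delta)                                       # (8.101)
    U_bar = U + (1 - pi0) * psi / dpi - gamma / r                           # (8.102)
    U_low = U - pi0 * psi / dpi - gamma / r                                 # (8.103)
    u_bar = (1 - delta) * (U + (1 - pi0) * psi / dpi) + delta * gamma / r   # (8.104)
    u_low = (1 - delta) * (U - pi0 * psi / dpi) + delta * gamma / r         # (8.105)
    return u_bar, u_low, U_bar, U_low, beta, gamma


# Illustrative parameters (assumptions, not from the text).
U, r, delta, pi0, pi1, psi = 0.0, 2.0, 0.9, 0.4, 0.8, 0.5
dpi = pi1 - pi0
u_bar, u_low, U_bar, U_low, beta, gamma = closed_form(U, r, delta, pi0, pi1, psi)

# Slack in (8.79), written as dpi * (lifetime utility gap) >= psi.
ic_slack = dpi * (u_bar - u_low + delta * (U_bar - U_low)) - psi
# Slack in the participation constraint (8.80).
pc_slack = (pi1 * (u_bar + delta * U_bar)
            + (1 - pi1) * (u_low + delta * U_low) - psi - U)
# Gap in the martingale condition (8.97).
mart_gap = math.exp(beta * U) - (pi1 * math.exp(beta * U_bar)
                                 + (1 - pi1) * math.exp(beta * U_low))
# Expected one-period change in promised utility.
drift = pi1 * (U_bar - U) + (1 - pi1) * (U_low - U)

print(f"IC slack {ic_slack:.1e}, PC slack {pc_slack:.1e}, martingale gap {mart_gap:.1e}")
print(f"U_bar - U = {U_bar - U:.4f}, U_low - U = {U_low - U:.4f}, drift = {drift:.4f}")
```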

Note that as $\delta$ goes to one, the following Taylor approximation holds:

$$
\gamma = r\psi + \frac{r^2(1-\delta)}{2}\,\pi_1(1-\pi_1)\left(\frac{\psi}{\Delta\pi}\right)^2 + O\big((1-\delta)^2\big),
$$

so that, from (8.102) and (8.103),

$$
\bar U(U) = U + \frac{(1-\pi_1)\psi}{\Delta\pi} + O(1-\delta), \qquad \underline U(U) = U - \frac{\pi_1\psi}{\Delta\pi} + O(1-\delta).
$$

As $\delta$ goes to one, whatever his degree of risk aversion, the agent behaves almost like a risk-neutral agent. Such an agent is incentivized by a reward (in utility terms) of $(1-\pi_1)\psi/\Delta\pi$ following a success and a punishment of $\pi_1\psi/\Delta\pi$ following a failure. This already suggests that the agency cost may not be too important when the future is not discounted too much.
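The expansion of $\gamma$ can be checked numerically. The Python sketch below uses illustrative parameter values, which are assumptions and not taken from the text. It compares $\gamma$ from (8.101) with the two-term expansion $r\psi + \frac{r^2(1-\delta)}{2}\pi_1(1-\pi_1)(\psi/\Delta\pi)^2$ and shows that the error shrinks roughly like $(1-\delta)^2$ as $\delta$ approaches one.

```python
import math


def gamma_exact(r, delta, pi0, pi1, psi):
    """gamma from (8.101); assumes r > 0, 0 < delta < 1, 0 < pi0 < pi1 < 1."""
    dpi = pi1 - pi0
    beta = r * (1 - delta)
    m = (pi1 * math.exp(beta * (1 - pi0) * psi / dpi)
         + (1 - pi1) * math.exp(-beta * pi0 * psi / dpi))
    return math.log(m) / (1 - delta)


def gamma_taylor(r, delta, pi0, pi1, psi):
    """First two terms of the expansion of gamma as delta goes to one."""
    dpi = pi1 - pi0
    return r * psi + 0.5 * r**2 * (1 - delta) * pi1 * (1 - pi1) * (psi / dpi) ** 2


r, pi0, pi1, psi = 2.0, 0.4, 0.8, 0.5   # illustrative assumptions
deltas = [0.9, 0.99, 0.999]
errors = []
for delta in deltas:
    err = gamma_exact(r, delta, pi0, pi1, psi) - gamma_taylor(r, delta, pi0, pi1, psi)
    errors.append(err)
    # If the expansion is right, err / (1 - delta)**2 stays bounded as delta -> 1.
    print(f"delta={delta}: error={err:.3e}, error/(1-delta)^2={err / (1 - delta) ** 2:.3f}")
```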

To find the behavior of the value function as $\delta$ goes to one, let us now determine the last parameter $\alpha$. Using the definition of $V(\cdot)$, we have

$$
V(U) = \pi_1\Big(\bar S - h\big(\bar u(U)\big) + \delta V\big(\bar U(U)\big)\Big) + (1-\pi_1)\Big(\underline S - h\big(\underline u(U)\big) + \delta V\big(\underline U(U)\big)\Big), \tag{8.108}
$$

where $(\bar u(U), \underline u(U))$ are given by (8.104) and (8.105) and $(\bar U(U), \underline U(U))$ are given by (8.102) and (8.103). Inserting these latter expressions in (8.108) and simplifying yields, after some tedious computations,

$$
\alpha = \frac{\pi_1\bar S + (1-\pi_1)\underline S}{1-\delta}.
$$

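Finally, the value function is written as

$$
V(U) = \frac{\pi_1\bar S + (1-\pi_1)\underline S}{1-\delta} - \frac{\exp\big(r(1-\delta)U + \gamma\big)}{r(1-\delta)}.
$$

Normalizing the length of the period by 1 − δ, the per-period value function is written as

$$
(1-\delta)V(U) = \pi_1\bar S + (1-\pi_1)\underline S - \frac{1}{r}\exp\big(r(1-\delta)U + \gamma\big).
$$

When δ converges to one, γ converges towards rψ and the per-period value function uniformly converges on all compact intervals of U toward

$$
\pi_1\bar S + (1-\pi_1)\underline S - \frac{1}{r}\exp(r\psi) = \pi_1\bar S + (1-\pi_1)\underline S - h(\psi),
$$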

which is the first-best profit of the principal inducing a positive effort.

Proposition 8.6: As δ goes to one, the principal's per-period expected profit in an infinitely repeated relationship with moral hazard converges towards its first-best value.
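A minimal numerical illustration of (8.108) and Proposition 8.6 follows, assuming hypothetical values for $\bar S$, $\underline S$ and the other parameters (none are from the text). It checks at a few promised utilities that $V(U) = \alpha - \exp(\beta U + \gamma)/\beta$, with $\alpha = (\pi_1\bar S + (1-\pi_1)\underline S)/(1-\delta)$, satisfies the Bellman equation (8.108) under the policy (8.102) to (8.105). It then shows the per-period value $(1-\delta)V(0)$ approaching the first-best $\pi_1\bar S + (1-\pi_1)\underline S - h(\psi)$ from below as $\delta \to 1$.

```python
import math

# Illustrative assumptions (not from the text).
r, pi0, pi1, psi = 2.0, 0.4, 0.8, 0.5
S_bar, S_low = 3.0, 1.0
dpi = pi1 - pi0


def h(u):
    """Inverse utility (transfer needed to give utility u): h(u) = exp(r u) / r."""
    return math.exp(r * u) / r


def solution(delta):
    """Return beta, gamma, alpha for the guessed value function."""
    beta = r * (1 - delta)
    m = (pi1 * math.exp(beta * (1 - pi0) * psi / dpi)
         + (1 - pi1) * math.exp(-beta * pi0 * psi / dpi))
    gamma = math.log(m) / (1 - delta)
    alpha = (pi1 * S_bar + (1 - pi1) * S_low) / (1 - delta)
    return beta, gamma, alpha


def V(U, delta):
    beta, gamma, alpha = solution(delta)
    return alpha - math.exp(beta * U + gamma) / beta


def bellman_rhs(U, delta):
    """Right-hand side of (8.108) evaluated at the policy (8.102)-(8.105)."""
    _, gamma, _ = solution(delta)
    U_bar = U + (1 - pi0) * psi / dpi - gamma / r
    U_low = U - pi0 * psi / dpi - gamma / r
    u_bar = (1 - delta) * (U + (1 - pi0) * psi / dpi) + delta * gamma / r
    u_low = (1 - delta) * (U - pi0 * psi / dpi) + delta * gamma / r
    return (pi1 * (S_bar - h(u_bar) + delta * V(U_bar, delta))
            + (1 - pi1) * (S_low - h(u_low) + delta * V(U_low, delta)))


bellman_gaps = [abs(V(U, 0.9) - bellman_rhs(U, 0.9)) for U in (-1.0, 0.0, 1.0)]
first_best = pi1 * S_bar + (1 - pi1) * S_low - h(psi)
per_period_gaps = []
for delta in (0.5, 0.9, 0.99, 0.999):
    gap = (1 - delta) * V(0.0, delta) - first_best   # negative, shrinking to zero
    per_period_gaps.append(gap)
    print(f"delta={delta}: (1-delta)V(0) - first best = {gap:.5f}")
```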

The intuition for this result is straightforward. Recall from chapter 4 that the source of inefficiency in a static moral hazard problem is the fact that the principal must let the risk-averse agent bear some risk. The principal benefits from the repetition of the game, because he can spread the agent's rewards and punishments over time and let the agent bear only a small fraction of the risk associated with his current effort in any given period. This risk is proportional to the length of the period 1 − δ. When δ is close to one, the risk borne by the agent in each period is thus made arbitrarily close to zero. This can be seen easily by computing the difference $\bar u(U) - \underline u(U) = (1-\delta)\psi/\Delta\pi$, which converges towards 0 as δ goes to 1. Therefore, the cost of moral hazard in a given period almost disappears, and the first-best level of profit is approached when δ is close enough to one.

Another interpretation of this result should be stressed. As the contractual relationship is repeated, the risk-averse agent is subject to many independent risks that arise at different points in time. The principal structures the intertemporal contract of the agent so as to let him become perfectly diversified. As a result of this complete diversification, the agent is almost risk-neutral, and the first-best outcome can be obtained just as in a static model with risk neutrality. In this sense, one can say that the infinitely repeated relationship is a good substitute for self-insurance if the relationship is repeated often enough.

Finally, it is interesting to characterize how the distribution of utilities that the agent gets after $i$ periods evolves. Given that (8.102) and (8.103) both hold, the utility $U(h_i)$, which is promised to the agent from any date $t = i$ on, is such that

$$
E_{i+1}\big(U(h_{i+1})\big) = \pi_1\bar U\big(U(h_i)\big) + (1-\pi_1)\underline U\big(U(h_i)\big) = U(h_i) - p.
$$

Here $E_{i+1}(\cdot)$ denotes the expectations of the output at date $t = i + 1$ when the agent exerts a positive effort in this period, and $p = \gamma/r - \psi$ is strictly positive (by Jensen's inequality applied to (8.101)). Using the Law of Iterated Expectations, we get $E\big(U(h_{i+1})\big) = E\big(U(h_i)\big) - p$. Proceeding recursively, we finally obtain $E\big(U(h_i)\big) = U_0 - ip$. Here $U_0 = 0$ is the agent's reservation utility at the start of the relationship and $h_i$ is the whole history of past outcomes up to date $t = i$. In particular, we have

$$
E\big(U(h_i)\big) = -ip,
$$

which converges towards minus infinity as $i$ goes to infinity. The expected utility of the agent from any period on decreases linearly over time, pushing the agent into regions where continuation payoffs are very likely to be negative. The assumption that the agent cannot leave the relationship is thus crucial for the result that the first-best outcome can be approximately implemented as δ goes to one.
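The downward drift can be illustrated by simulating the laws of motion (8.102) and (8.103) from $U_0 = 0$ under high effort. After a success, promised utility moves up by $(1-\pi_0)\psi/\Delta\pi - \gamma/r$; after a failure, it moves down by $\pi_0\psi/\Delta\pi + \gamma/r$. The expected per-period decline is $p = \gamma/r - \psi$. The Python sketch below uses illustrative parameter values and a fixed random seed, both assumptions. It compares the sample mean of promised utility with $-ip$ and reports the share of paths on which promised utility is negative.

```python
import math
import random

# Illustrative assumptions (not from the text).
r, delta, pi0, pi1, psi = 2.0, 0.9, 0.4, 0.8, 0.5
dpi = pi1 - pi0
beta = r * (1 - delta)
gamma = math.log(pi1 * math.exp(beta * (1 - pi0) * psi / dpi)
                 + (1 - pi1) * math.exp(-beta * pi0 * psi / dpi)) / (1 - delta)  # (8.101)
up = (1 - pi0) * psi / dpi - gamma / r      # U_bar(U) - U, from (8.102)
down = pi0 * psi / dpi + gamma / r          # U - U_low(U), from (8.103)
p = gamma / r - psi                         # expected per-period decline

rng = random.Random(0)                      # fixed seed for reproducibility
n_paths, horizon, checkpoints = 5000, 200, (10, 200)
stats = {}
paths = [0.0] * n_paths                     # U_0 = 0 on every path
for i in range(1, horizon + 1):
    for k in range(n_paths):
        # High output with probability pi1 when the agent exerts high effort.
        paths[k] += up if rng.random() < pi1 else -down
    if i in checkpoints:
        mean = sum(paths) / n_paths
        share_negative = sum(u < 0 for u in paths) / n_paths
        stats[i] = (mean, share_negative)
        print(f"i={i}: sample mean {mean:.3f} vs -i*p {-i * p:.3f}, "
              f"share of negative promised utility {share_negative:.3f}")
```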

This property is also useful when computing the whole distribution of expected utilities $U(h_i)$ from any period $i$ on. The laws of motion are given by (8.102) and (8.103). Figure 8.4 below explains how this distribution evolves over time.

It is straightforward to observe that the future expected utility $U(h_i)$ following any history $h_i$ depends only on the number of high outcomes $\bar q$ that have been realized up to date $t = i$. Assuming $n$ realizations of $\bar q$ in a given history $h_i$, and starting from $U_0 = 0$, we have

$$
U(h_i) = n\left(\frac{(1-\pi_0)\psi}{\Delta\pi} - \frac{\gamma}{r}\right) - (i-n)\left(\frac{\pi_0\psi}{\Delta\pi} + \frac{\gamma}{r}\right),
$$

where $\bar U(U) - U = \frac{(1-\pi_0)\psi}{\Delta\pi} - \frac{\gamma}{r}$ is the agent's reward when a high output realizes and $U - \underline U(U) = \frac{\pi_0\psi}{\Delta\pi} + \frac{\gamma}{r}$ is his punishment following a low output. Note that the probability that a history with $n$ high outcomes up to period $i$ takes place is $\binom{i}{n}\pi_1^n(1-\pi_1)^{i-n}$, the probability of $n$ successes in $i$ Bernoulli trials.
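Because $U(h_i)$ depends only on the number $n$ of successes, its exact distribution follows from the binomial probabilities. The Python sketch below builds that distribution from $U(h_i) = n\,(\text{reward}) - (i-n)\,(\text{punishment})$, with illustrative parameter values that are assumptions, not from the text. The helper name `promised_utility_distribution` is hypothetical. The sketch checks that the mean equals $-ip$ with $p = \gamma/r - \psi$ and that the variance equals $i\pi_1(1-\pi_1)(\psi/\Delta\pi)^2$, since reward plus punishment equals $\psi/\Delta\pi$.

```python
import math

# Illustrative assumptions (not from the text).
r, delta, pi0, pi1, psi = 2.0, 0.9, 0.4, 0.8, 0.5
dpi = pi1 - pi0
beta = r * (1 - delta)
gamma = math.log(pi1 * math.exp(beta * (1 - pi0) * psi / dpi)
                 + (1 - pi1) * math.exp(-beta * pi0 * psi / dpi)) / (1 - delta)  # (8.101)
up = (1 - pi0) * psi / dpi - gamma / r      # reward after a high output
down = pi0 * psi / dpi + gamma / r          # punishment after a low output
p = gamma / r - psi


def promised_utility_distribution(i):
    """Hypothetical helper: list of (U(h_i), probability) pairs for n = 0..i successes."""
    return [(n * up - (i - n) * down,
             math.comb(i, n) * pi1**n * (1 - pi1) ** (i - n))
            for n in range(i + 1)]


i = 30
dist = promised_utility_distribution(i)
total = sum(prob for _, prob in dist)
mean = sum(u * prob for u, prob in dist)
var = sum((u - mean) ** 2 * prob for u, prob in dist)
print(f"total prob {total:.6f}, mean {mean:.4f} (-i*p = {-i * p:.4f}), "
      f"variance {var:.4f} (theory {i * pi1 * (1 - pi1) * (psi / dpi) ** 2:.4f})")
```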

It is also interesting to note that the distribution of the promised utility $U(h_i)$, once centered on its mean $-ip$ and scaled by $\sqrt{i}$, converges in law toward a normal distribution. From a direct application of the Central Limit Theorem we have indeed

$$
\frac{U(h_i) + ip}{\frac{\psi}{\Delta\pi}\sqrt{i\,\pi_1(1-\pi_1)}} \xrightarrow[i\to\infty]{\mathcal{L}} N(0,1),
$$

where $N(0, 1)$ is a normal distribution with zero mean and unit variance. Since fluctuations around $-ip$ are only of order $\sqrt{i}$, this convergence suggests that the utility levels promised to the agent from any date on are unlikely to diverge too much away from the downward drift as the relationship becomes infinitely long.
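The normal approximation can be checked against the exact binomial distribution. One can verify from the reward and punishment above that $U(h_i) + ip = (\psi/\Delta\pi)(n - i\pi_1)$. The normalized promised utility is therefore exactly the standardized count of successes, and this Central Limit Theorem is the de Moivre-Laplace theorem. The Python sketch below uses illustrative parameters (assumptions, not from the text). It computes the Kolmogorov distance between the exact distribution of the normalized $U(h_i)$ and $N(0,1)$, and that distance should shrink as $i$ grows.

```python
import math

# Illustrative assumptions (not from the text).
r, delta, pi0, pi1, psi = 2.0, 0.9, 0.4, 0.8, 0.5
dpi = pi1 - pi0
beta = r * (1 - delta)
gamma = math.log(pi1 * math.exp(beta * (1 - pi0) * psi / dpi)
                 + (1 - pi1) * math.exp(-beta * pi0 * psi / dpi)) / (1 - delta)  # (8.101)
up, down = (1 - pi0) * psi / dpi - gamma / r, pi0 * psi / dpi + gamma / r
p = gamma / r - psi
sigma = (psi / dpi) * math.sqrt(pi1 * (1 - pi1))   # per-period standard deviation


def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def kolmogorov_distance(i):
    """sup_z |P(Z_i <= z) - Phi(z)| for Z_i = (U(h_i) + i p) / (sigma sqrt(i))."""
    cdf, dist = 0.0, 0.0
    for n in range(i + 1):
        # Binomial probability of n successes, computed in logs to avoid overflow.
        log_prob = (math.lgamma(i + 1) - math.lgamma(n + 1) - math.lgamma(i - n + 1)
                    + n * math.log(pi1) + (i - n) * math.log(1 - pi1))
        z = (n * up - (i - n) * down + i * p) / (sigma * math.sqrt(i))
        phi = normal_cdf(z)
        dist = max(dist, abs(cdf - phi))   # left limit of the step at z
        cdf += math.exp(log_prob)
        dist = max(dist, abs(cdf - phi))   # value of the step at z
    return dist


distances = {i: kolmogorov_distance(i) for i in (10, 100, 1000)}
for i, d in distances.items():
    print(f"i={i}: Kolmogorov distance to N(0,1) = {d:.4f}")
```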

Figure 8.4: Distribution of Future Expected Utilities up to Period 3

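The structure of the incentive scheme is also easily obtained from the structure of the utilities. Suppose there have been $n$ successes up to date $t = i$, so that the promised utility $U(h_i)$ is as computed above. The incentive scheme at date $t = i + 1$ is then such that

$$
\bar t(h_i) = h\big(\bar u(U(h_i))\big) = \frac{1}{r}\exp\left(r(1-\delta)\left(U(h_i) + \frac{(1-\pi_0)\psi}{\Delta\pi}\right) + \delta\gamma\right),
$$

$$
\underline t(h_i) = h\big(\underline u(U(h_i))\big) = \frac{1}{r}\exp\left(r(1-\delta)\left(U(h_i) - \frac{\pi_0\psi}{\Delta\pi}\right) + \delta\gamma\right).
$$

As the number of periods increases, the downward drift of utility toward minus infinity implies that transfers get closer and closer to their lowest possible bound, zero.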
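To see transfers approach their lower bound, the Python sketch below evaluates $\bar t(h_i) = h(\bar u(U(h_i)))$ and $\underline t(h_i) = h(\underline u(U(h_i)))$, with $h(u) = \exp(ru)/r$ and current utilities from (8.104) and (8.105). It does so along histories in which exactly a fraction $\pi_1$ of outputs are high, so that $U(h_i) = -ip$, the expected promised utility. The parameter values and the helper name `transfers` are illustrative assumptions.

```python
import math

# Illustrative assumptions (not from the text).
r, delta, pi0, pi1, psi = 2.0, 0.9, 0.4, 0.8, 0.5
dpi = pi1 - pi0
beta = r * (1 - delta)
gamma = math.log(pi1 * math.exp(beta * (1 - pi0) * psi / dpi)
                 + (1 - pi1) * math.exp(-beta * pi0 * psi / dpi)) / (1 - delta)  # (8.101)
up, down = (1 - pi0) * psi / dpi - gamma / r, pi0 * psi / dpi + gamma / r


def transfers(n, i):
    """Hypothetical helper: (t_bar, t_low) paid after a history with n successes in i periods."""
    U = n * up - (i - n) * down                                             # U(h_i), U_0 = 0
    u_bar = (1 - delta) * (U + (1 - pi0) * psi / dpi) + delta * gamma / r   # (8.104)
    u_low = (1 - delta) * (U - pi0 * psi / dpi) + delta * gamma / r         # (8.105)
    # Transfers are h(u) = exp(r u) / r, the inverse of u(t) = ln(r t) / r.
    return math.exp(r * u_bar) / r, math.exp(r * u_low) / r


path = []
for i in (5, 50, 500, 5000):
    n = round(pi1 * i)                  # a "typical" history with n = pi1 * i successes
    t_bar, t_low = transfers(n, i)
    path.append((t_bar, t_low))
    print(f"i={i}, n={n}: t_bar={t_bar:.3e}, t_low={t_low:.3e}")
```

The ratio $\bar t/\underline t = \exp(r(1-\delta)\psi/\Delta\pi)$ is the same after every history, so both transfers shrink together as promised utility drifts down.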

Spear and Srivastava (1987) were the first to state the infinitely repeated moral hazard problem as a recursive problem. Using the first-order approach, they focused on the case of a continuum of possible levels of effort and found many difficulties in the characterization of the optimal contract. They also proved the Markov property of the optimal contract. At a more abstract level, repeated principal-agent relationships are examples of repeated games with strategies based on public information, namely the history of past performances. The earlier contributions to the repeated principal-agent literature were precisely cast in a game-theoretic setting. Rubinstein (1979) showed that, when agents do not discount the future, the first-best effort can also be implemented by the use of so-called review strategies. Such a strategy punishes the agent's deviations from the first-best level of effort when those deviations are statistically detectable. Radner (1985) also used review strategies in the case of discounting. These latter papers were not interested in computing the optimal dynamic contract, but they already showed that a repeated relationship could alleviate much of the agency problem. Radner, Maskin, and Myerson (1986) provided an example (involving team production, not a single agent) in which efficiency is lost even when the common discount factor δ goes to one. The general theory of repeated games with public information is due to Fudenberg, Levine, and Maskin (1994). They derived sufficient conditions on the information structure to ensure first-best implementation when δ goes to one. They devoted a whole section to the case of principal-agent models and compared their approach, which was based on dynamic programming, with that used by Radner (1985), Rubinstein (1979), and Rubinstein and Yaari (1983).
In the context of insurance against income shocks, i.e., in an adverse selection context, Green (1987), Thomas and Worrall (1990), Atkeson and Lucas (1992), and Phelan (1994) have computed explicit expressions for the value function for some specifications of preferences.

Source: Laffont Jean-Jacques, Martimort David (2002), The Theory of Incentives: The Principal-Agent Model, Princeton University Press.